Juntao Ren

I am a PhD student at Stanford University. I enjoy thinking about how we can enable robots to learn and reason over time in the physical world. I am grateful to be supported by the Knight-Hennessy Fellowship and the NSF Graduate Research Fellowship.

I completed my undergraduate degree in Computer Science and Mathematics at Cornell University, where I am grateful to have worked with Prof. Sanjiban Choudhury. I've also spent time at 1X Technologies working on world models.

Please feel free to reach out!

Publications

* indicates equal contribution

A Smooth Sea Never Made a Skilled SAILOR: Robust Imitation via Learning to Search

TL;DR — We train both world and reward models from demonstration data, giving the agent the ability to reason about how to recover from mistakes at test time.

Motion Tracks: A Unified Representation for Human-Robot Transfer

TL;DR — We propose a unified action space by representing actions as 2D trajectories on an image, enabling robots to directly imitate from cross-embodiment datasets.

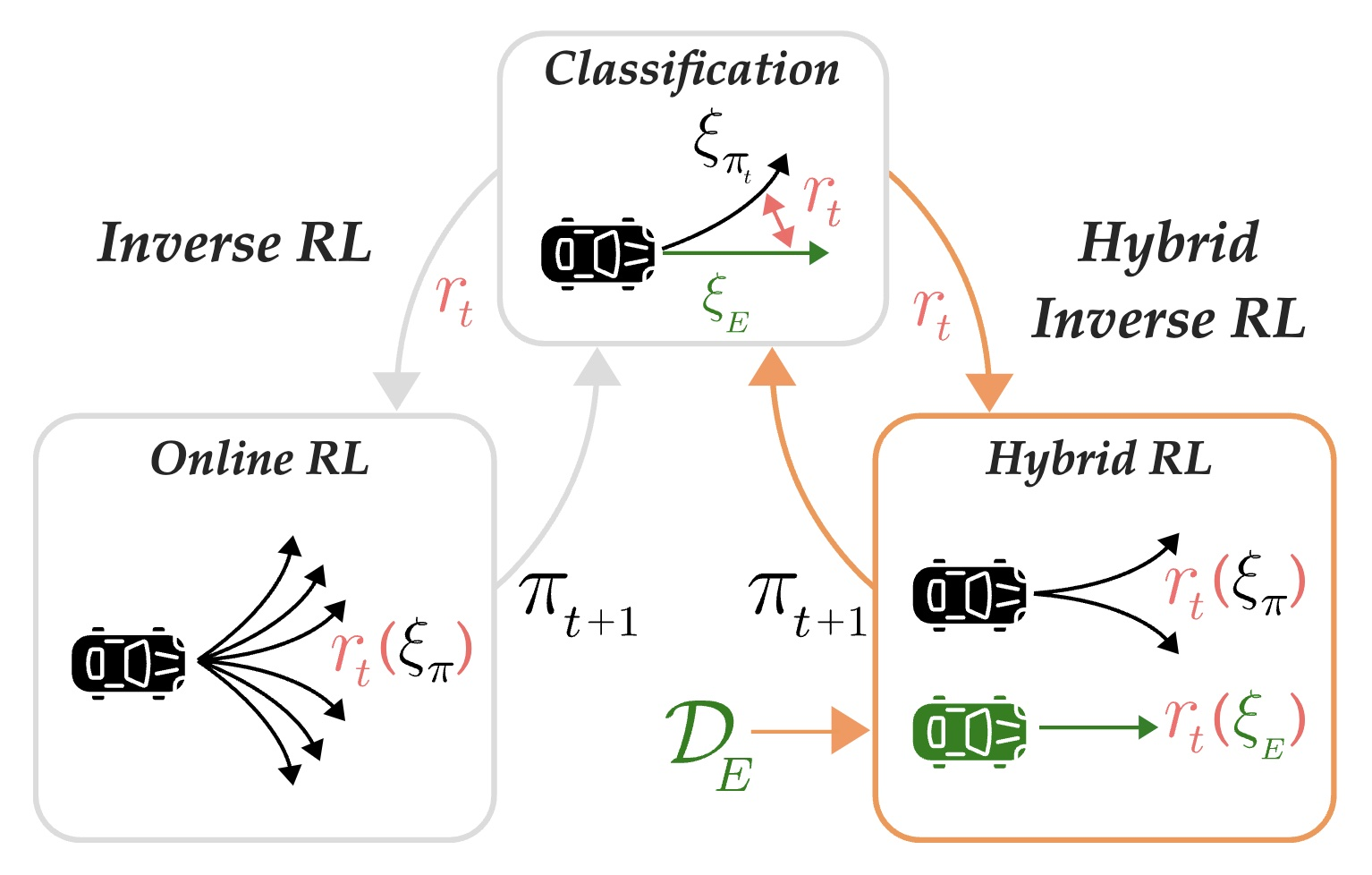

Hybrid Inverse Reinforcement Learning

Paper · Website · Code · Video

TL;DR — We show that training on both expert and learner data can provably speed up interactive imitation learning in the absence of rewards, for both model-free and model-based algorithms.

MOSAIC: A Modular System for Assistive and Interactive Cooking

TL;DR — We build a top-down modular system that enables human-robot collaboration in the kitchen by combining high-level planners, low-level visuomotor policies, and human-motion forecasting.

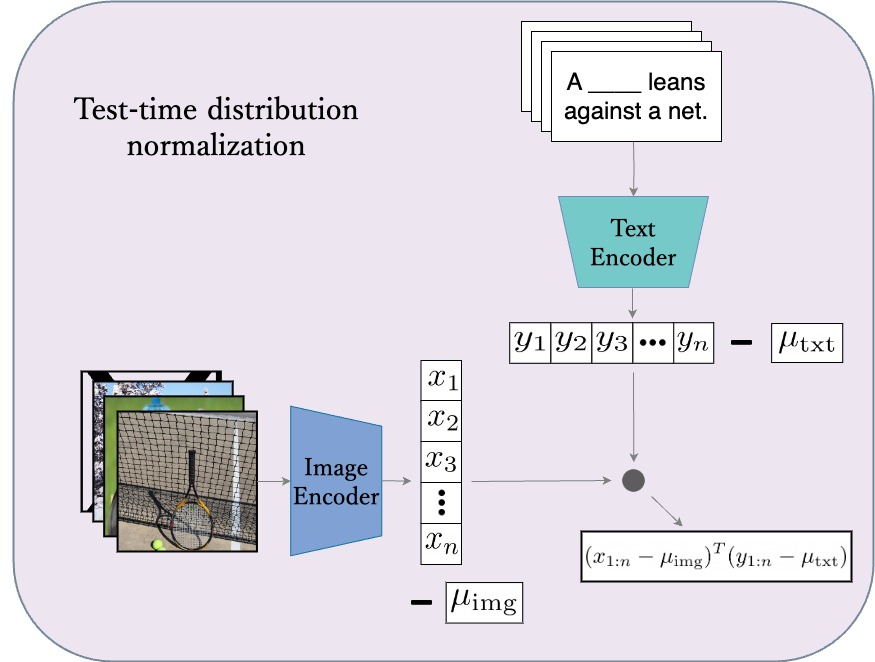

Test-Time Distribution Normalization for Contrastively Learned Visual-language Models

TL;DR — We prove that subtracting the mean image embedding and the mean language embedding from each sample at test time better aligns inference with the training objective and improves performance.
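
The mean-subtraction idea in this TL;DR can be illustrated with a minimal sketch. It assumes the means are estimated from the test batch itself and that similarity is a plain dot product; the function names (`mean_vector`, `center`, `normalized_similarity`) are hypothetical, and the paper's exact formulation (for example, any scaling of the mean) may differ.

```python
from typing import List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Sequence[float]]) -> Vector:
    """Component-wise mean of a non-empty batch of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def center(vectors: Sequence[Sequence[float]], mean: Sequence[float]) -> List[Vector]:
    """Subtract the given mean from every vector in the batch."""
    return [[x - m for x, m in zip(v, mean)] for v in vectors]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalized_similarity(
    image_embs: Sequence[Sequence[float]],
    text_embs: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Image-text similarity matrix after centering each modality.

    Assumption: each modality's mean is estimated from the test batch
    passed in here, which is an illustrative choice, not the paper's recipe.
    """
    images_centered = center(image_embs, mean_vector(image_embs))
    texts_centered = center(text_embs, mean_vector(text_embs))
    return [[dot(i, t) for t in texts_centered] for i in images_centered]


if __name__ == "__main__":
    # Toy 2-D embeddings standing in for real image and text features.
    images = [[1.0, 0.0], [0.0, 1.0]]
    texts = [[1.0, 0.0], [0.0, 1.0]]
    for row in normalized_similarity(images, texts):
        print(row)
```

In this toy example, centering turns the score of each mismatched pair negative while each matched pair stays positive.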